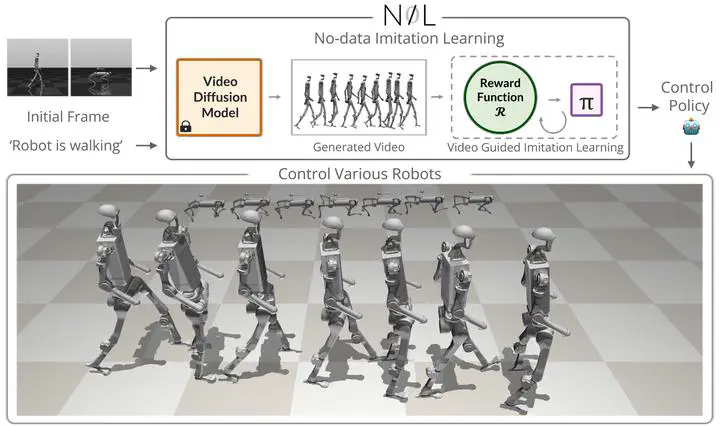

NIL Overview: from single frame + prompt → generated video → policy learning.


In International Conference on Learning Representations (ICLR) 2025

Abstract

Acquiring physically plausible motor skills across diverse and unconventional morphologies—from humanoids to ants—is crucial for robotics and simulation.

We introduce No-data Imitation Learning (NIL), which:

- Generates a reference video with a pretrained video diffusion model from a single simulation frame + text prompt.

- Learns a policy in simulation by comparing rendered agent videos against the generated reference via video-encoder embeddings and segmentation-mask IoU.
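
The page does not spell out how the mask term is computed. The snippet below is a minimal sketch that assumes the standard intersection-over-union of binary masks, averaged over frames. `mask_iou` and `video_iou` are hypothetical names, and masks are plain nested lists of booleans. Obtaining the masks (running a segmentation model on the rendered and generated frames) is outside the sketch, and NIL's actual implementation may aggregate or weight frames differently.

```python
from typing import List, Sequence

# A binary segmentation mask: H rows of W booleans (True = agent pixel).
Mask = Sequence[Sequence[bool]]


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two equally sized binary masks."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += bool(pa) and bool(pb)
            union += bool(pa) or bool(pb)
    # Convention (an assumption): two empty masks count as a perfect match.
    return inter / union if union else 1.0


def video_iou(agent: List[Mask], reference: List[Mask]) -> float:
    """Mean per-frame IoU between an agent video and a reference video."""
    if not agent or len(agent) != len(reference):
        raise ValueError("videos must be non-empty and equally long")
    return sum(mask_iou(a, r) for a, r in zip(agent, reference)) / len(agent)


# Usage: 3 pixels are shared out of the 4 covered by either mask.
agent_mask = [[False, True, True], [False, True, True]]
ref_mask = [[False, False, True], [False, True, True]]
print(mask_iou(agent_mask, ref_mask))  # 0.75
```

Averaging over frames keeps the score in [0, 1] regardless of clip length, which makes it easy to combine with other reward terms. A per-frame reward would be an equally plausible design.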

NIL matches or outperforms baselines trained on real motion-capture data, effectively replacing expensive data collection with generative video priors.

Teaser

NIL generates expert videos on the fly with a pretrained video diffusion model, then trains policies purely from those generated 2D videos, with no human data required.

NIL Overview

**Stage 1:** Generate reference video Fᵢⱼ with diffusion model D from initial frame e₀ and prompt pᵢⱼ.

**Stage 2:** Train policy πᵢⱼ in a physics simulator to imitate Fᵢⱼ using (1) video-encoder similarity, (2) segmentation IoU, and (3) smoothness regularization.

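
How the three Stage 2 terms are combined is not specified here. As an illustrative sketch only, the snippet below assumes a per-step reward of the form r = w_video · cos(z_agent, z_ref) + w_mask · IoU − w_smooth · ‖aₜ − aₜ₋₁‖², where z_agent and z_ref are embeddings of the agent and reference clips from some pretrained video encoder (not shown). The function names, the squared-action-difference smoothness penalty, and the default weights are all hypothetical and are not taken from NIL.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two embeddings; 0.0 if either vector is all zeros."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def smoothness_penalty(action: Sequence[float], prev_action: Sequence[float]) -> float:
    """Squared L2 change between consecutive actions (larger means jerkier)."""
    if len(action) != len(prev_action):
        raise ValueError("actions must have the same dimension")
    return sum((a - p) ** 2 for a, p in zip(action, prev_action))


def combined_reward(
    agent_emb: Sequence[float],
    ref_emb: Sequence[float],
    iou: float,
    action: Sequence[float],
    prev_action: Sequence[float],
    w_video: float = 1.0,   # hypothetical weight
    w_mask: float = 1.0,    # hypothetical weight
    w_smooth: float = 0.1,  # hypothetical weight
) -> float:
    """Hypothetical weighted sum: video similarity + mask IoU - smoothness penalty."""
    return (
        w_video * cosine_similarity(agent_emb, ref_emb)
        + w_mask * iou
        - w_smooth * smoothness_penalty(action, prev_action)
    )


# Usage: identical embeddings, IoU of 0.75, one action dimension changes by 0.5.
r = combined_reward([1.0, 0.0], [1.0, 0.0], 0.75, [0.5, 0.5], [0.5, 0.0])
print(r)
```

Setting a weight to zero drops the corresponding term, which is one simple way to run a reward-component ablation like the one listed under Experiments.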

Experiments

We validate NIL on locomotion tasks for multiple morphologies (humanoids, quadrupeds, animals).

- Reward components: ablation of the video-similarity, mask-IoU, and smoothness-regularization terms.

- Policy performance: matches or exceeds motion-capture-trained baselines.

- Generalization: works zero-shot on unseen morphologies.

BibTeX

```bibtex
@article{albaba2025nil,
  title = {NIL: No-data Imitation Learning by Leveraging Pre-trained Video Diffusion Models},
  author = {Albaba, Mert and Li, Chenhao and Diomataris, Markos and Taheri, Omid and Krause, Andreas and Black, Michael},
  journal = {arXiv},
  year = {2025},
}
```