Teaser: HaPTIC Framework Overview

Teaser: HaPTIC Framework Overview

Abstract

We present HaPTIC, an approach that infers coherent 4D hand trajectories from monocular videos. Current video-based hand pose reconstruction methods primarily focus on improving frame-wise 3D pose using adjacent frames rather than studying consistent 4D hand trajectories in space. Despite the additional temporal cues, they generally underperform compared to image-based methods due to the scarcity of annotated video data. To address these issues, we repurpose a state-of-the-art image-based transformer to take in multiple frames and directly predict a coherent trajectory. We introduce two types of lightweight attention layers: cross-view self-attention to fuse temporal information, and global cross-attention to bring in larger spatial context. Our method infers 4D hand trajectories similar to the ground truth while maintaining strong 2D reprojection alignment. We apply the method to both egocentric and allocentric videos. It significantly outperforms existing methods in global trajectory accuracy while being comparable to the state-of-the-art in single-image pose estimation.

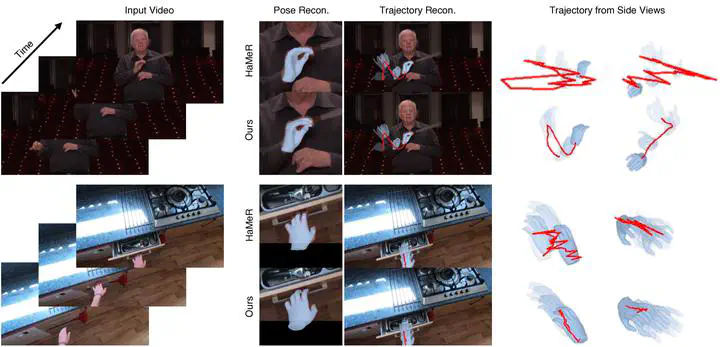

Teaser

Method Overview

Overall pipeline (left): HaPTIC extends HaMeR by predicting both per-frame MANO parameters and a global 4D trajectory via a shared transformer.

Inside one image tower (right): We inject cross-view self-attention to fuse temporal frames and global cross-attention for spatial context.

Comparison with Baselines

The de-facto “lifting” methods suffer from jitter; metric‐depth estimators struggle under occlusion; whole-body models fail when large parts of the hand are out of view. HaPTIC delivers smooth, globally-consistent trajectories while matching 2D reprojection quality.

Test-Time Optimization

Can a simple test-time refinement smooth out jagged feed-forward predictions? We find that while optimization can reduce local jitter, it’s much less effective at correcting global drift—HaPTIC provides a superior initialization.

More Results

BibTeX

@article{ye2025predicting,

author = {Ye, Yufei and Feng, Yao and Taheri, Omid and Feng, Haiwen and Tulsiani, Shubham and Black, Michael J.},

title = {Predicting 4D Hand Trajectory from Monocular Videos},

journal = {arXiv preprint arXiv:2501.08329},

year = {2025},

doi = {10.48550/arXiv.2501.08329},

}