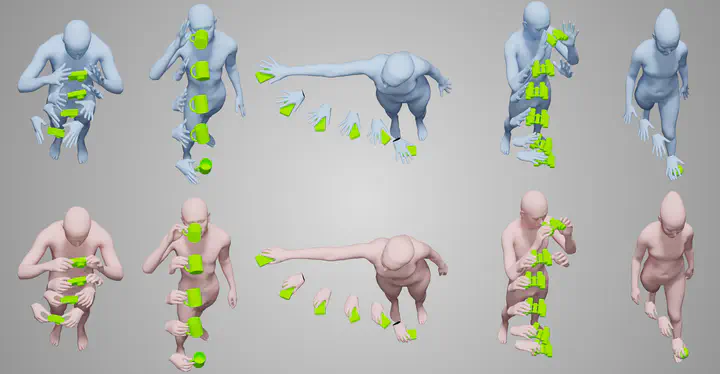

GRIP: Generating Interaction Poses Conditioned on Object and Body Motion

Abstract

Hands are dexterous and highly versatile manipulators that are central to how humans interact with objects and their environment. Consequently, modeling realistic hand-object interactions, including the subtle motion of individual fingers, is critical for applications in computer graphics, computer vision, and mixed reality. To address this challenge, we propose GRIP, a learning-based method that synthesizes the motion of both the left and right hands before, during, and after interaction with objects. We leverage the dynamics between the body and the object to extract two novel types of temporal interaction cues. We then define a two-stage inference pipeline. In the first stage, GRIP generates temporally consistent interaction motion through a new approach that models temporal consistency in the latent space. In the second stage, GRIP refines the hand poses to avoid finger-to-object penetrations. Both quantitative experiments and perceptual studies demonstrate that GRIP outperforms existing methods and generalizes to unseen objects and motions. Furthermore, GRIP runs in real time, predicting hand poses at 45 fps. Our models and code will be available for research purposes.